多模态语言与理解基准 | 原创,AI翻译

引言

本文评估了一个语言模型,使用MMLU(Massive Multitask Language Understanding)基准。

MMLU基准是一个全面的测试,用于评估模型在各种主题上的多任务处理能力。它包含多项选择题,涵盖了数学、历史、法律和医学等多个领域。

数据集链接:

llama-server

运行llama-server:

build/bin/llama-server -m models/7B/mistral-7b-instruct-v0.2.Q4_K_M.gguf --port 8080

MMLU 基准

此脚本使用三种不同的后端(ollama, llama-server 和 deepseek)评估MMLU基准。

运行MMLU基准代码:

import torch

from datasets import load_dataset

import requests

import json

from tqdm import tqdm

import argparse

import os

from openai import OpenAI

from dotenv import load_dotenv

import time

import random

load_dotenv()

# 设置参数解析

parser = argparse.ArgumentParser(description="使用不同后端评估MMLU数据集。")

parser.add_argument("--type", type=str, default="ollama", choices=["ollama", "llama", "deepseek", "gemini", "mistral"], help="后端类型: ollama, llama, deepseek, gemini 或 mistral")

parser.add_argument("--model", type=str, default="", help="模型名称")

args = parser.parse_args()

# 加载MMLU数据集

subject = "college_computer_science" # 选择您的主题

dataset = load_dataset("cais/mmlu", subject, split="test")

# 使用一项示例格式化提示

def format_mmlu_prompt(example):

prompt = f"问题: {example['question']}\n"

prompt += "选择:\n"

for i, choice in enumerate(example['choices']):

prompt += f"{chr(ord('A') + i)}. {choice}\n"

prompt += "给出你的答案。只给出选择。\n"

return prompt

# 如果需要,初始化DeepSeek客户端

def initialize_deepseek_client():

api_key = os.environ.get("DEEPSEEK_API_KEY")

if not api_key:

print("错误: 环境变量DEEPSEEK_API_KEY未设置。")

exit()

return OpenAI(api_key=api_key, base_url="https://api.deepseek.com")

def call_gemini_api(prompt, retries=3, backoff_factor=1):

gemini_api_key = os.environ.get("GEMINI_API_KEY")

if not gemini_api_key:

print("错误: 环境变量GEMINI_API_KEY未设置。")

exit()

url = f"https://generativelanguage.googleapis.com/v1beta/models/gemini-1.5-flash:generateContent"

params = {"key": gemini_api_key}

payload = {"contents": [{"parts": [{"text": prompt}]}]}

print(f"输入到Gemini API: {payload}")

for attempt in range(retries):

response = requests.post(url, json=payload, params=params)

response_json = response.json()

print(response_json)

if response.status_code == 200:

return response_json

elif response.status_code == 429:

time.sleep(backoff_factor * (2 ** attempt)) # 指数退避

else:

raise Exception(f"Gemini API 错误: {response.status_code} - {response_json}")

return None

def call_mistral_api(prompt, model="mistral-small-2501", process_response=True):

api_key = os.environ.get("MISTRAL_API_KEY")

if not api_key:

print("错误: 环境变量MISTRAL_API_KEY未设置。")

return None

url = "https://api.mistral.ai/v1/chat/completions"

headers = {

"Content-Type": "application/json",

"Accept": "application/json",

"Authorization": f"Bearer {api_key}"

}

data = {

"model": model,

"messages": [

{

"role": "user",

"content": prompt

}

]

}

print(f"输入到Mistral API: {data}")

print(f"Mistral API URL: {url}")

print(f"Mistral API Headers: {headers}")

try:

response = requests.post(url, headers=headers, json=data)

response.raise_for_status()

response_json = response.json()

print(response_json)

if response_json and response_json['choices']:

content = response_json['choices'][0]['message']['content']

if process_response:

return process_mistral_response(content)

else:

return content

else:

print(f"Mistral API 错误: 无效的响应格式: {response_json}")

return None

except requests.exceptions.RequestException as e:

print(f"Mistral API 错误: {e}")

stre = f"{e}"

if '429' in stre:

print("请求过多, 睡眠10秒后重试")

time.sleep(10)

return call_mistral_api(prompt, model, process_response)

raise e

import re

def process_ollama_response(response):

if response.status_code == 200:

print(f"API输出: {response.json()}")

output_text = response.json()["choices"][0]["message"]["content"]

match = re.search(r"答案:\s*([A-D])", output_text, re.IGNORECASE)

if not match:

match = re.search(r"\*\*答案\*\*:\s*([A-D])", output_text, re.IGNORECASE)

if not match:

match = re.search(r"正确答案是\s*([A-D])", output_text, re.IGNORECASE)

if not match:

match = re.search(r"正确选择是\s*([A-D])", output_text, re.IGNORECASE)

if not match:

match = re.search(r"正确选择应该是\s*([A-D])", output_text, re.IGNORECASE)

if not match:

match = re.search(r"答案是\s*([A-D])", output_text, re.IGNORECASE)

if not match:

match = re.search(r"答案似乎是\s*([A-D])", output_text, re.IGNORECASE)

if not match:

match = re.search(r"正确答案应该是\s*([A-D])", output_text, re.IGNORECASE)

if not match:

match = re.search(r"正确答案应该是\s*([A-D])", output_text, re.IGNORECASE)

if match:

predicted_answer = match.group(1).upper()

else:

stripped_output = output_text.strip()

if len(stripped_output) > 0:

first_word = stripped_output.split(" ")[0]

if len(first_word) == 1:

predicted_answer = first_word

else:

first_word_comma = stripped_output.split(",")[0]

if len(first_word_comma) == 1:

predicted_answer = first_word_comma

else:

first_word_period = stripped_output.split(".")[0]

if len(first_word_period) == 1:

predicted_answer = first_word_period

else:

print(f"无法从输出中提取单个字符答案: {output_text},返回随机答案")

predicted_answer = random.choice(["A", "B", "C", "D"])

else:

predicted_answer = ""

return predicted_answer

else:

print(f"错误: {response.status_code} - {response.text}")

return ""

def process_llama_response(response):

if response.status_code == 200:

output_text = response.json()["choices"][0]["message"]["content"]

predicted_answer = output_text.strip()[0] if len(output_text.strip()) > 0 else ""

print(f"API输出: {output_text}")

return predicted_answer

else:

print(f"错误: {response.status_code} - {response.text}")

return ""

def process_deepseek_response(client, prompt, model="deepseek-chat", retries=3, backoff_factor=1):

print(f"输入到Deepseek API: {prompt}")

for attempt in range(retries):

try:

response = client.chat.completions.create(

model=model,

messages=[

{"role": "user", "content": prompt}

],

max_tokens=100

)

if response and response.choices:

output_text = response.choices[0].message.content.strip()

predicted_answer = output_text.strip()[0] if len(output_text.strip()) > 0 else ""

print(f"API输出: {output_text}")

return predicted_answer

else:

print("错误: 无API响应。")

return ""

except Exception as e:

if "502" in str(e):

print(f"网关错误(502)期间的API调用,重试在{backoff_factor * (2 ** attempt)}秒...")

time.sleep(backoff_factor * (2 ** attempt))

else:

print(f"API调用期间错误: {e}")

return ""

return ""

def process_mistral_response(response):

if response:

output_text = response.strip()

predicted_answer = output_text.strip()[0] if len(output_text.strip()) > 0 else ""

print(f"API输出: {output_text}")

return predicted_answer

else:

print("错误: 无Mistral API响应")

return ""

def process_gemini_response(prompt):

json_response = call_gemini_api(prompt)

if not json_response:

print("重试后无Gemini API响应。")

return ""

if 'candidates' not in json_response or not json_response['candidates']:

print("未在响应中找到候选项,重试...")

json_response = call_gemini_api(prompt)

print(json_response)

if not json_response or 'candidates' not in json_response or not json_response['candidates']:

print("重试后在响应中未找到候选项。")

return ""

first_candidate = json_response['candidates'][0]

if 'content' in first_candidate and 'parts' in first_candidate['content']:

first_part = first_candidate['content']['parts'][0]

if 'text' in first_part:

output_text = first_part['text']

predicted_answer = output_text.strip()[0] if len(output_text.strip()) > 0 else ""

print(f"API输出: {output_text}")

return predicted_answer

else:

print("未在响应中找到文本")

return ""

else:

print("意外的响应格式: 内容或部分缺失")

return ""

def _call_ollama_api(prompt, model):

url = "http://localhost:11434/v1/chat/completions"

data = {

"messages": [{"role": "user", "content": prompt}],

"model": model,

"max_tokens": 300

}

headers = {"Content-Type": "application/json"}

print(f"输入到API: {data}")

response = requests.post(url, headers=headers, data=json.dumps(data))

return process_ollama_response(response)

def _call_llama_api(prompt):

url = "http://localhost:8080/v1/chat/completions"

data = {

"messages": [{"role": "user", "content": prompt}]

}

headers = {"Content-Type": "application/json"}

print(f"输入到API: {data}")

response = requests.post(url, headers=headers, data=json.dumps(data))

return process_llama_response(response)

def _get_predicted_answer(args, prompt, client):

predicted_answer = ""

if args.type == "ollama":

predicted_answer = _call_ollama_api(prompt, args.model)

elif args.type == "llama":

predicted_answer = _call_llama_api(prompt)

elif args.type == "deepseek":

predicted_answer = process_deepseek_response(client, prompt, args.model)

elif args.type == "gemini":

predicted_answer = process_gemini_response(prompt)

elif args.type == "mistral":

predicted_answer = call_mistral_api(prompt, args.model)

else:

raise ValueError("无效的后端类型")

return predicted_answer

def evaluate_model(args, dataset):

correct = 0

total = 0

client = None

if args.type == "deepseek":

client = initialize_deepseek_client()

if args.model == "":

if args.type == "ollama":

args.model = "mistral:7b"

elif args.type == "deepseek":

args.model = "deepseek-chat"

elif args.type == "mistral":

args.model = "mistral-small-latest"

for i, example in tqdm(enumerate(dataset), total=len(dataset), desc="评估中"):

prompt = format_mmlu_prompt(example)

predicted_answer = _get_predicted_answer(args, prompt, client)

answer_map = {0: "A", 1: "B", 2: "C", 3: "D"}

ground_truth_answer = answer_map.get(example["answer"], "")

is_correct = predicted_answer.upper() == ground_truth_answer

if is_correct:

correct += 1

total += 1

print(f"问题: {example['question']}")

print(f"选择: A. {example['choices'][0]}, B. {example['choices'][1]}, C. {example['choices'][2]}, D. {example['choices'][3]}")

print(f"预测答案: {predicted_answer}, 真实答案: {ground_truth_answer}, 正确: {is_correct}")

print("-" * 30)

if (i+1) % 10 == 0:

accuracy = correct / total

print(f"处理了 {i+1}/{len(dataset)}。当前准确率: {accuracy:.2%} ({correct}/{total})")

return correct, total

# 评估循环

correct, total = evaluate_model(args, dataset)

# 计算准确率

accuracy = correct / total

print(f"主题: {subject}")

print(f"准确率: {accuracy:.2%} ({correct}/{total})")

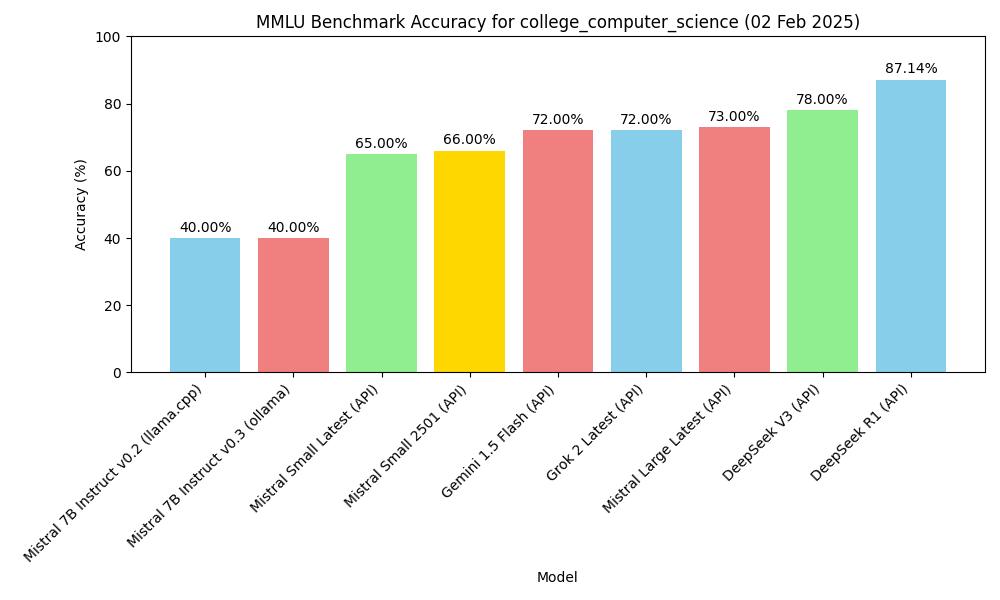

结果

零样本评估

| 模型 | 方式 | 主题 | 准确率 |

|---|---|---|---|

| mistral-7b-instruct-v0.2, Q4_K_M | macOS m2, 16GB, llama-server | MMLU college_computer_science | 40.00% (40/100) |

| Mistral-7B-Instruct-v0.3, Q4_0 | macOS m2, 16GB, ollama | MMLU college_computer_science | 40.00% (40/100) |

| deepseek v3 (API) | API, 2025.1.25 | MMLU college_computer_science | 78.00% (78/100) |

| gemini-1.5-flash (API) | API, 2025.1.25 | MMLU college_computer_science | 72.00% (72/100) |

| deepseek r1 (API) | API, 2025.1.26 | MMLU college_computer_science | 87.14% (61/70) |

| Mistral Small Latest (API) | API, 2025.01.31 | MMLU college_computer_science | 65.00% (65/100) |

| Mistral Large Latest (API) | API, 2025.01.31 | MMLU college_computer_science | 73.00% (73/100) |

| Mistral Small 2501 (API) | API, 2025.01.31 | MMLU college_computer_science | 66.00% (66/100) |

| Grok 2 Latest | API, 2025.02.02 | MMLU college_computer_science | 72.00% (72/100) |

图表

基于上表创建图表。

import matplotlib.pyplot as plt

import os

# 示例数据(用您的实际数据替换)

models = ['mistral-7b-instruct-v0.2 (llama.cpp)', 'Mistral-7B-Instruct-v0.3 (ollama)', 'deepseek v3 (API)', 'gemini-1.5-flash (API)', 'deepseek r1 (API)']

accuracy = [40.00, 40.00, 78.00, 72.00, 87.14]

subject = "college_computer_science"

# 创建柱状图

plt.figure(figsize=(10, 6))

plt.bar(models, accuracy, color=['skyblue', 'lightcoral', 'lightgreen', 'gold', 'lightcoral'])

plt.xlabel('模型')

plt.ylabel('准确率 (%)')

plt.title(f'MMLU基准准确率({subject})')

plt.ylim(0, 100) # 设置y轴限制为0-100以表示百分比

plt.xticks(rotation=45, ha="right") # 旋转x轴标签以提高可读性

plt.tight_layout()

# 在条形顶部添加准确率值

for i, val in enumerate(accuracy):

plt.text(i, val + 1, f'{val:.2f}%', ha='center', va='bottom')

# 将图表保存为当前目录中的JPG文件

plt.savefig(os.path.join(os.path.dirname(__file__), f'mmlu_accuracy_chart.jpg'))

plt.show()

MMLU基准准确率

MMLU基准准确率